Skills

As a balance to my more creative side that you might’ve seen in the “About Me” tab, I like to think that I have quite a logical mindset. Both sides have come together in my professional life so far, and I would like to introduce you to that a bit!

As a general overview, I graduated from the University of Toronto with a Specialization in Machine Learning and Data Science. I always knew that I had a knack for math, science, and computers, so it just made sense to enter the world of computer science during and after high school.

I have experience in:

- Python (NumPy, pandas, scikit-learn, Matplotlib, etc.)

- Time Series Classification and Time Series Forecasting Models

- C++, C

- Neural Networks, Reinforcement Learning, MiniMax Trees, Heuristics

- SQL, R

- Java

- HTML, CSS, JavaScript

- Microsoft Office Products (Power BI, Excel, Word, PowerPoint)

- Photoshop and Adobe Products

I've mostly worked in Python outside of my university career, which I will elaborate on below. I have mostly used the other languages in academic settings, but I have completed projects in each nonetheless.

Work Experience

May 2021 - Data Scientist @ Scotiabank

Story

As my first ever co-op experience, I was excited to begin working on the Executive Insights & Analytics Team and get a taste of what being a Data Scientist really meant. This was my first time dealing with a lot of data and learning how data is stored and what the general flow of a data science project looks like. I learned about data lakes, data warehouses, and how to query them for a model. This was also the first machine learning model I had ever officially helped create. The problem I worked on was a binary time series classification problem. It was truly interesting to see the power of sufficient data and to turn those analytics into digestible dashboards through Power BI. Wanting to learn more, I also took the opportunity to take many LinkedIn Learning courses on topics that I found relevant; I completed 14 courses covering Python packages such as pandas, Docker, and overall productivity.

Beyond the technical experience, I was also able to learn from some amazing people. Each person on that team was extremely welcoming and genuinely cared about helping me learn something new. It was my first time being able to talk to people working in the industry I wanted to get into. Everyone had their own story and experiences that I could learn from. Although I spent different amounts of time with each of them, they all had a significant impact that I will always appreciate. I truly enjoyed hosting meetings with them and running our agile ceremonies in fun and interesting ways. They taught me that a good work environment is productive, fun, and supportive. I hope to help create that type of environment on whichever team I find myself.

Lastly, I also joined a couple of clubs hosted by other Velocity students. These clubs were called student development groups; I joined the Investment Club, Cryptocurrencies Club, and Data Science Club. We had presentations every week on specific topics, and I was even able to bring in one of my previous managers to talk about real estate investing for the Investment Club. It was a lot of fun to meet others with similar interests and learn from their experiences as well.

Key Take-aways

- Experience in Data Lakes and Data Warehouses

- Curated training and testing data sets

- Contributed to the development of a Python-based classification model assessing payroll

- Implemented a state-of-the-art classification model across over 3 million customers

- Presented data-driven insights using automated Power BI applications

- Facilitated Agile Scrum Ceremonies such as daily stand-ups, retrospectives, and other team-building activities

May 2022 - Data Scientist @ Scotiabank

Story

I was very fortunate to get another opportunity at Scotiabank, this time participating in a new framework that the Velocity program was trying under the Corporate Functions Analytics team. This arrangement was called the Velocity Squad. Essentially, the idea was to have a group of 5 students in various positions take a problem from ideation to a finalized product. This had been done once before with success, so we were the next group to give this framework a go. The group comprised 1 Project Manager, 1 Data Engineer, and 3 Data Scientists, including myself. This was a very fast-paced environment, as we hoped to have a complete product by the end of the 4 months. Luckily, we also had mentors along the way and were in constant contact with the business line to ensure that we were performing at our best.

The problem we were given was a time series forecasting problem: predicting future values so that sufficient cash could be supplied to ABMs across Canada. We were essentially automating a job that would normally take hours and a lot of manual effort. I was able to work on every front: I helped prepare sufficient, clean data for the model, helped create model functions and metrics, and helped with dashboard creation. It was a pleasure to look at historical data and identify seasonality and trends, fine-tune pipelines, and bridge the gaps so that each part of the model and pipeline ran smoothly and effectively. As a result, we were able to create a very accurate model that could run automatically every day for the business line.

In this position I also presented to very large audiences, explaining our process and results and answering questions. Our project was very successful; our VP even suggested that we enter a Data Visualization competition. Following our success with the predictions in Canada, the solution was reused and deployed in many countries across North and South America. I also gained a couple of friends along the way!

Key Take-aways

- Successfully developed a Python-based time series forecasting model assessing cash supply

- Deployed model across millions of ABMs across North and South America

- Conducted end-to-end processes of the project from ideation to production

- Performed data cleaning and consolidation methods using efficient data pipelines

- Delivered project progress updates and key findings to senior executives monthly

January 2023 - Machine Learning Engineer @ BlackBerry

Story

Having previously worked on a project from ideation to production, I was excited to hop on a project that had already gone through production, and a few iterations at that. This team was one of the smallest I had ever worked on, as it consisted of only 3 people, not counting co-ops. They often referred to themselves as operating like a start-up, and upon working with them I understood why. Everyone was very well acquainted and specialized in different areas, and they were able to piece their work together to create the projects that they had. I really enjoyed working with each of them and learning how they came to the expertise that they have.

I worked on multiple projects, all of which overlapped with each other. The main machine learning project I worked on was log anomaly detection. It was interesting to look at what had already been built and test it to determine what could be improved or changed. In addition to making adjustments, I completely refactored and restructured the code base while making sure to maintain or improve model performance. Since the code base consisted of work from around 7 previous interns, I had a clear goal of making the project consistent. This was not only for myself, but also for the interns who would onboard after me. These efforts helped optimize the code overall. I also worked on integrating other libraries into the code to make it simpler and more cohesive with other projects.

Key Take-aways

- Restructured and streamlined a main codebase to enhance organization and cohesion

- Created a complete package based on existing models to be executed in conjunction with other projects

- Tested against existing anomaly detection model features and methods to determine efficacy

- Performed data cleaning and consolidation methods using efficient data pipelines

- Presented progress on multiple tasks on a weekly basis

University Career

During my university career I learned many of the foundations of the machine learning that I work with today. I’ve taken courses in machine learning algorithms, machine learning theory, statistical methods, and much more. Below I outline the most impactful course projects I’ve completed.

January 2022 – Mouse vs. Kitties Game

- Heuristics Search

- MiniMax Trees

- Reinforcement Learning

Over the span of a few months, I had the opportunity to apply various machine learning algorithms to a mouse in a simple cat and mouse chase game. In its simplest form, the game consisted of a mouse that runs through a maze, away from a kitten and towards cheese. This was made harder by adding up to 4 kittens with adjustable intelligence and multiple cheese targets that spawned randomly.

Each algorithm was taught in theory first, and then I had to implement it in the project on my own. All algorithms took into account various features of the situation, such as the size of the maze, the number of kittens, the number of cheeses, and the distances relative to the maze walls.

The first algorithm I learned was heuristic search. This included creating different heuristic equations to optimize the path of the mouse. The types of searches that I implemented were Uniform Cost Search (UCS) and Heuristic A* Search. Both expand positions in order of cost; A* adds to the cost of the path so far an estimate of the remaining cost, given by the heuristic equations I created.
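
As a rough illustration of the two searches, here is a minimal Python sketch on a small grid maze. The maze layout, the unit step cost, and the Manhattan-distance heuristic are assumptions made for this example and are not the heuristics from the course project. Passing a heuristic that always returns zero turns the same function into Uniform Cost Search.

```python
import heapq

def a_star(grid, start, goal, heuristic):
    """Return the number of steps on a cheapest path, or None if unreachable.

    grid is a list of rows where 0 is an open cell and 1 is a wall.
    """
    rows, cols = len(grid), len(grid[0])
    # Each frontier entry is (path cost + heuristic estimate, path cost, cell).
    frontier = [(heuristic(start, goal), 0, start)]
    best_cost = {start: 0}
    while frontier:
        _, cost, cell = heapq.heappop(frontier)
        if cell == goal:
            return cost
        if cost > best_cost.get(cell, float("inf")):
            continue  # stale entry: a cheaper route to this cell was found later
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                new_cost = cost + 1  # assumed: every step costs 1
                if new_cost < best_cost.get((nr, nc), float("inf")):
                    best_cost[(nr, nc)] = new_cost
                    priority = new_cost + heuristic((nr, nc), goal)
                    heapq.heappush(frontier, (priority, new_cost, (nr, nc)))
    return None

def manhattan(a, b):
    """Illustrative heuristic: grid distance that ignores walls."""
    return abs(a[0] - b[0]) + abs(a[1] - b[1])

def zero(a, b):
    """With no heuristic information, A* behaves like Uniform Cost Search."""
    return 0

# Hypothetical maze: mouse at the top-left, cheese at the bottom-right.
maze = [
    [0, 0, 0, 0],
    [1, 1, 0, 1],
    [0, 0, 0, 0],
    [0, 1, 1, 0],
]
steps_a_star = a_star(maze, (0, 0), (3, 3), manhattan)
steps_ucs = a_star(maze, (0, 0), (3, 3), zero)
```

Both calls find a path of the same length; the heuristic only changes how many cells are explored along the way.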

The next algorithm that I learned was Minimax Trees. This was an interesting approach, as it was based on the idea of building a valued tree from every possible step of the game. Doing this through a full game is extremely impractical, so the tree was cut down, with a utility function used to score the positions it reached. In addition, I implemented alpha-beta pruning to avoid unnecessary computation.
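
The idea can be sketched in Python as a depth-limited minimax with alpha-beta pruning. The toy tree of nested lists, the `children` and `utility` helpers, and the neutral score of 0 at a depth cutoff are all assumptions for illustration; in the game, the states would be maze positions and the utility would be a hand-designed scoring function.

```python
import math

def alphabeta(node, depth, alpha, beta, maximizing, children, utility):
    """Depth-limited minimax value of node, skipping branches that cannot matter."""
    kids = children(node)
    if depth == 0 or not kids:
        return utility(node)
    if maximizing:
        value = -math.inf
        for child in kids:
            value = max(value, alphabeta(child, depth - 1, alpha, beta,
                                         False, children, utility))
            alpha = max(alpha, value)
            if alpha >= beta:
                break  # the minimizing player would never allow this branch
        return value
    value = math.inf
    for child in kids:
        value = min(value, alphabeta(child, depth - 1, alpha, beta,
                                     True, children, utility))
        beta = min(beta, value)
        if alpha >= beta:
            break  # the maximizing player already has a better option
    return value

# Hypothetical game tree: inner lists are positions, numbers are leaf scores.
tree = [[3, 5], [2, 9], [0, 7]]
visited = []  # records which nodes were actually scored

def children(node):
    return node if isinstance(node, list) else []

def utility(node):
    visited.append(node)
    # Assumed placeholder: a position cut off before a leaf scores 0.
    return node if isinstance(node, (int, float)) else 0

best_value = alphabeta(tree, 2, -math.inf, math.inf, True, children, utility)
```

In this toy tree only four of the six leaves are scored, because the second and third branches are abandoned as soon as they are shown to be worse than the first.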

The last algorithm that I learned for this game was Reinforcement Learning. This has always been my favourite approach in machine learning, since its foundations are so human. It’s the idea of rewarding “good” behaviour or punishing “bad” behaviour and allowing the agent (the mouse) to learn from those observations. The agent builds a policy that maps the different states of the game to the best actions.
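
A minimal Python sketch of this reward-driven loop is shown below, using tabular Q-learning. The choice of Q-learning, the one-dimensional corridor standing in for the maze, the reward values, and the hyperparameters are all assumptions for illustration and are not taken from the course project.

```python
import random

N_STATES = 5        # corridor cells 0..4; the cheese sits in the last cell
ACTIONS = (-1, +1)  # action index 0 = step left, index 1 = step right

def step(state, action_index):
    """Move the mouse, clipping at the corridor ends."""
    nxt = min(max(state + ACTIONS[action_index], 0), N_STATES - 1)
    done = nxt == N_STATES - 1
    # Assumed rewards: +1 for reaching the cheese, a small penalty per step.
    reward = 1.0 if done else -0.01
    return nxt, reward, done

def train(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]  # q[state][action_index]
    for _ in range(episodes):
        state = 0
        for _ in range(200):  # cap on episode length
            if rng.random() < epsilon:
                action = rng.randrange(2)  # explore
            else:
                action = max(range(2), key=lambda i: q[state][i])  # exploit
            nxt, reward, done = step(state, action)
            # Move the estimate towards the reward plus discounted future value.
            target = reward if done else reward + gamma * max(q[nxt])
            q[state][action] += alpha * (target - q[state][action])
            state = nxt
            if done:
                break
    return q

q_table = train()
# Greedy policy for every non-terminal cell: the best-valued action index.
policy = [max(range(2), key=lambda i: q_table[s][i]) for s in range(N_STATES - 1)]
```

After training, the greedy policy picks "step right" in every cell, which is the shortest route to the cheese in this corridor.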

Overall, I received a good mark on each of these sections and learned a lot about these foundational concepts along the way.

April 2022 – Optical Character Recognition

- Neural Networks

- Metrics

This project in particular was quite interesting, as it involved looking at images and using neural nets to determine which letter each one shows. This was the first project where I was able to see the use of common functions that I had studied in my theory courses. These functions were the Threshold Activation function, Logistic function, and Hyperbolic Tangent function; after seeing these for years and never understanding the connection, this was quite enlightening.
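
For reference, the three functions can be written in a few lines of Python. This is a minimal sketch using the standard definitions; the function names and the threshold's convention of firing at exactly zero are assumptions for this example.

```python
import math

def threshold(x):
    """Step activation: fires (1.0) when the input is non-negative."""
    return 1.0 if x >= 0 else 0.0

def logistic(x):
    """Smooth S-shaped curve with outputs between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-x))

def hyperbolic_tangent(x):
    """Smooth S-shaped curve with outputs between -1 and 1."""
    return math.tanh(x)

def logistic_slope(x):
    """Derivative of the logistic function, used when adjusting weights."""
    s = logistic(x)
    return s * (1.0 - s)

def tanh_slope(x):
    """Derivative of the hyperbolic tangent."""
    return 1.0 - math.tanh(x) ** 2

# The hyperbolic tangent is a rescaled logistic: tanh(x) = 2 * logistic(2x) - 1.
sample = 0.7
gap = abs(hyperbolic_tangent(sample) - (2.0 * logistic(2.0 * sample) - 1.0))
```

The smooth functions matter for training because, unlike the threshold, they have a usable slope everywhere, which is what lets the error be pushed back through the network to adjust the weights.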

In terms of the networks, I implemented single-layer and multi-layer networks. After each pass through the network, the weights were adjusted to reduce error. The model was trained for a set number of rounds and stopped early if the squared error was no longer decreasing. Separately, cross-validation was set up to measure improvement on new data. Lastly, I also implemented batch updates to help prevent over-fitting.

Overall, I also received a good grade on this project and took my first step closer to deep learning.

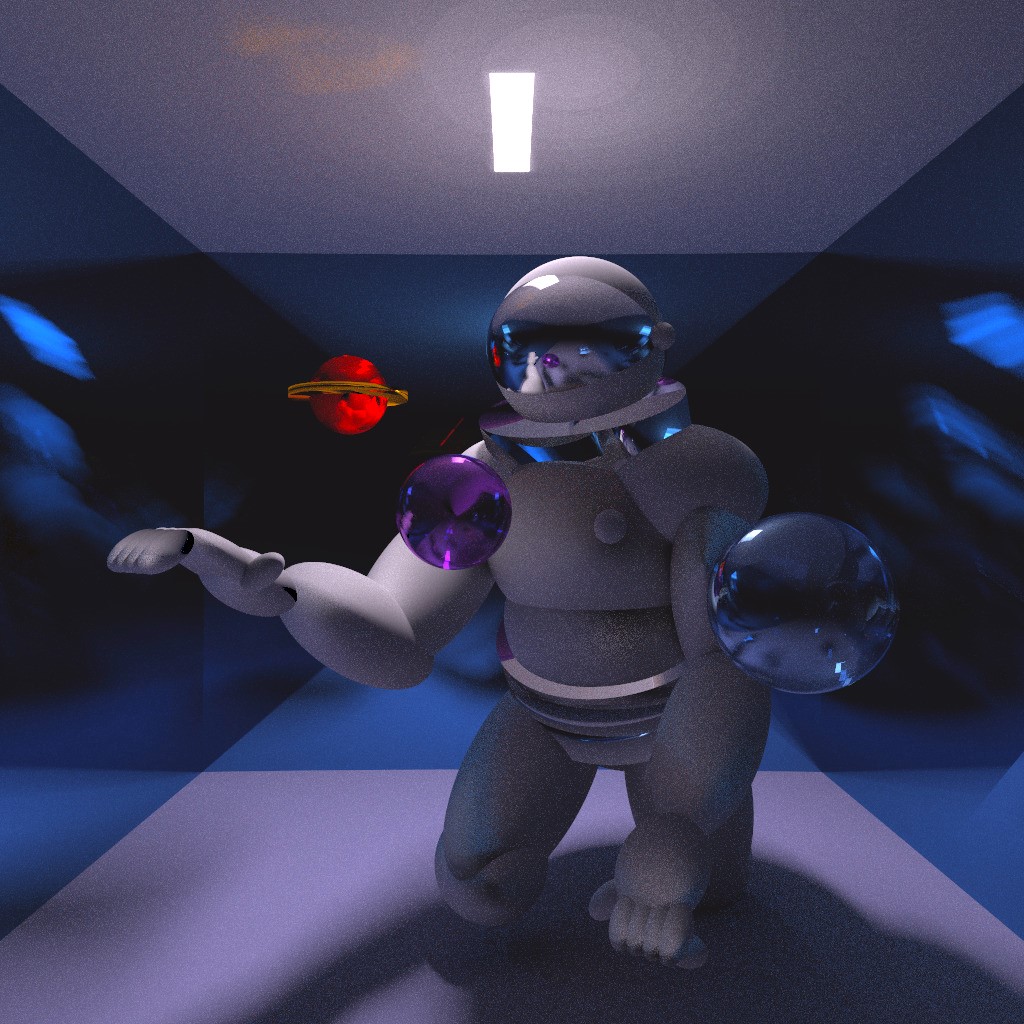

November 2022 – Path Tracing

- Pinhole model

- Affine Transforms

- Homogeneous Projection Matrices

- Forward and Whitted Ray Tracing

- Area Light Sources

- Antialiasing

- Texture Mapping

- Photon Mapping

- Depth of Field

- Path Tracing

This project was a bit different from the other ones I’ve listed here. I’ve always had a passion for computer graphics and have been curious about how images are generated and rendered through algorithms. This whole course was based on the idea of rendering images essentially from scratch, as we used nothing but base C code.

Before this project I had also learned about ray tracing and how to set up 2-dimensional and 3-dimensional spaces. I was able to implement explicit light sampling and importance sampling of rays to reduce the time spent rendering the images.
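
To show why importance sampling saves rendering time, here is a minimal Python sketch (the project itself was written in C). It estimates the light arriving at a surface from a uniformly bright hemisphere, first with uniform ray directions and then with cosine-weighted directions. The test integrand, the function names, and the choice of cosine weighting are assumptions for illustration; the sampling distributions used in the project are not stated here.

```python
import math
import random

def sample_uniform_hemisphere(rng):
    """Return (cosine of the angle to the surface normal, pdf) for a uniform direction."""
    cos_theta = rng.random()  # uniform in cos(theta) is uniform in solid angle
    return cos_theta, 1.0 / (2.0 * math.pi)

def sample_cosine_hemisphere(rng):
    """Return (cosine, pdf) for a direction drawn with density cos(theta) / pi."""
    u = rng.random()                 # u is in [0, 1), so 1 - u is never zero
    cos_theta = math.sqrt(1.0 - u)
    return cos_theta, cos_theta / math.pi

def estimate(sampler, n, seed=0):
    """Monte Carlo estimate of the integral of cos(theta) over the hemisphere."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n):
        cos_theta, pdf = sampler(rng)
        total += cos_theta / pdf  # integrand divided by the sampling density
    return total / n

# The exact answer is pi.
uniform_estimate = estimate(sample_uniform_hemisphere, 20000)
cosine_estimate = estimate(sample_cosine_hemisphere, 20000)
```

Because the cosine-weighted density has the same shape as the integrand, every sample contributes the same value and the estimate has essentially no noise, while the uniform version is only approximately right at the same sample count. In a renderer, less noise per ray means fewer rays are needed for a clean image.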